I’ve been messing around with an old proof-of-concept project I started back when I was at Meta, and it’s finally starting to take shape into something way cooler than I originally envisioned. The project is called HelpAR—basically a mixed reality technical support guide that helps users self-fix common computer issues, specifically when they’re working in Mixed Reality (MR) or Virtual Reality (VR).

The Origin: Meta Days

It all started when I was working at Meta as part of an internal experiment. I got pulled off the Helpdesk team in Austin and was told to “build something cool” in Unity related to tech support. Pretty broad, right? I didn’t have a detailed roadmap or a real end goal, but I knew I wanted to tackle something in the space of technical troubleshooting, maybe mixed with MR/VR.

So, the idea behind HelpAR was born.

The Proof of Concept

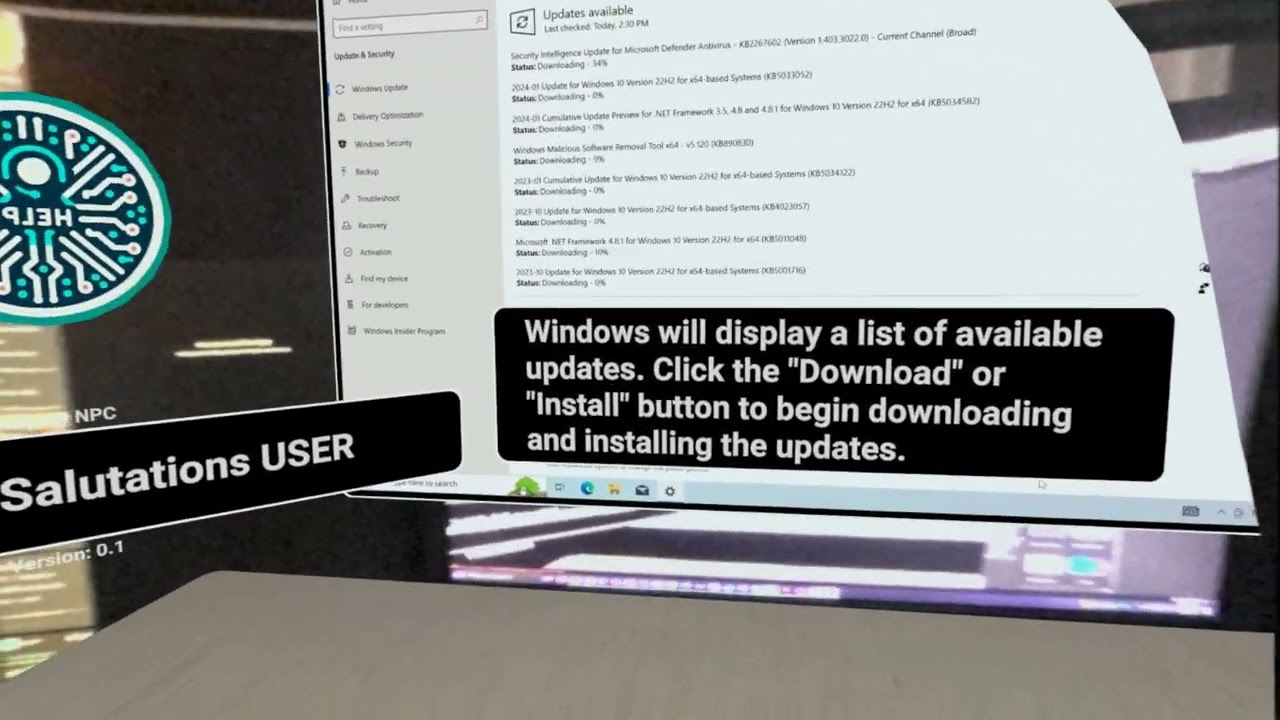

At the time, I was in a Systems Engineer apprenticeship, juggling multiple projects (pitching ideas and building proofs of concept for different teams in the hope of getting brought onto one of them), so my focus was pretty scattered. Despite that, I managed to get a basic version of HelpAR up and running:

- You could summon the Guide AI.

- You’d get to pick from two common issues.

- Then you’d see a slideshow of the fix with the Guide UI overlaid on your screen.

And that was it. The idea never really got built out further. I was working through the tail end of Meta’s three rounds of layoffs, and by the time my apprenticeship was ending, I knew there was no chance of getting brought onto any of the teams—most of them had been laid off, and there was a hiring freeze. So, I just coasted for the remainder of my apprenticeship, and the project died when I left Meta.

The Concept

The main goal? Help users troubleshoot their own problems in real time, using MR to guide them through the process. Here’s how it was supposed to work:

- User encounters a tech issue: Let’s say you’re working on your computer in a VR headset, and something goes wrong—an update fails, your Wi-Fi isn’t connecting, or you’ve got one of those dreaded blue screens.

- Summon HelpAR: You’d be able to call up the HelpAR guide by saying something like “HelpAR, fix my network” or using some kind of gesture or command. Think of it as a VR assistant designed for IT support.

- Guide to Resolution: HelpAR would then ask for a rough idea of the issue and request access to your desktop. Once connected, it would pull up the relevant guide and overlay instructions directly onto your screen. This could include arrows pointing to what you need to click, floating buttons like “Click Here,” and step-by-step instructions projected onto your desktop.

- Fancy UI: It wasn’t just a boring text list. There were visual cues like floating arrows, text boxes, and interactive guides to hold the user’s hand through each step. It made the whole process more intuitive and less overwhelming, especially for users not comfortable with troubleshooting.
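
The original was a Unity project, so what follows isn't HelpAR's code. It's a rough, language-agnostic sketch in Python of how the flow above could hang together: a spoken command gets matched to a guide, and each step of that guide carries the instruction the voice reads out plus an optional overlay cue. Everything in it is hypothetical and made up for illustration: the `Step` and `Guide` types, the keyword matching, the guide contents, and the cue and target names.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Step:
    """One step of a guide: the instruction plus an optional overlay cue."""
    instruction: str
    cue: Optional[str] = None     # hypothetical overlay type, e.g. "arrow" or "click_here"
    target: Optional[str] = None  # hypothetical on-screen element the cue points at


@dataclass
class Guide:
    name: str
    keywords: Set[str]
    steps: List[Step] = field(default_factory=list)


# Hypothetical guide library; real content would come from the support team.
GUIDES = [
    Guide(
        name="network",
        keywords={"network", "wifi", "wi-fi", "internet"},
        steps=[
            Step("Open your network settings.", cue="arrow", target="network_icon"),
            Step("Select your Wi-Fi network and click Connect.", cue="click_here", target="connect_button"),
        ],
    ),
    Guide(
        name="update",
        keywords={"update", "updates"},
        steps=[
            Step("Open your update settings.", cue="arrow", target="update_settings"),
            Step("Click Retry on the failed update.", cue="click_here", target="retry_button"),
        ],
    ),
]


def match_guide(command: str) -> Optional[Guide]:
    """Pick the guide whose keywords overlap most with the spoken command, or None."""
    words = {w.strip(",.!?").lower() for w in command.split()}
    best = max(GUIDES, key=lambda g: len(g.keywords & words))
    return best if best.keywords & words else None


def run_guide(guide: Guide) -> List[str]:
    """Render each step as the text the overlay (and the voice) would present."""
    rendered = []
    for i, step in enumerate(guide.steps, start=1):
        line = f"Step {i}: {step.instruction}"
        if step.cue:
            line += f" [{step.cue} -> {step.target}]"
        rendered.append(line)
    return rendered


if __name__ == "__main__":
    guide = match_guide("HelpAR, fix my network")
    if guide is not None:
        for line in run_guide(guide):
            print(line)
```

Keyword matching is about the crudest stand-in there is for figuring out what the user means. A real version would probably hand the transcript to some kind of intent model, but keeping each guide as plain data (steps plus cues) means the overlay and the voice can both read from the same source.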

Backup Plan: Call a Human

Now, of course, not all issues can be solved by an AI guide. Sometimes you just need human intervention. So, if the self-fix didn’t work, you could summon a real-life support agent who would show up as an avatar. This would make the experience more like a face-to-face chat instead of your typical call center or text-based support. The agent would be able to:

- Request access to view your desktop (pretty standard in tech support).

- Take control of the user’s system if necessary, with permission, of course.
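
The handoff to a human is mostly a permissions problem. Here's a small sketch, again in Python and again entirely hypothetical, of how the view-then-control flow could be gated on the user saying yes at each stage. The `Access` levels, the `SupportSession` class, and the rule that access only goes up one level at a time are my assumptions, not something the original build had.

```python
from enum import Enum
from typing import List


class Access(Enum):
    NONE = 0
    VIEW = 1     # agent can see the user's desktop
    CONTROL = 2  # agent can drive the user's mouse and keyboard


class SupportSession:
    """Hypothetical session between a user and a human agent's avatar.

    Assumption: access only rises one level at a time, and only with user approval.
    """

    def __init__(self) -> None:
        self.access = Access.NONE
        self.log: List[str] = []

    def request(self, level: Access, user_approves: bool) -> bool:
        """Agent asks for the next access level; returns True if it was granted."""
        if level.value != self.access.value + 1:
            self.log.append(f"rejected: cannot jump to {level.name}")
            return False
        if not user_approves:
            self.log.append(f"denied by user: {level.name}")
            return False
        self.access = level
        self.log.append(f"granted: {level.name}")
        return True

    def revoke(self) -> None:
        """The user can drop all access at any time."""
        self.access = Access.NONE
        self.log.append("revoked: NONE")
```

Forcing the agent through VIEW before CONTROL mirrors the order in the list above: look first, take over only if needed, and the user can pull the plug whenever they want.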

Rebuilding It: Take Two

Fast forward a year. On a random weekend, I decided to pick the project back up from scratch. This time around, I had ChatGPT at my disposal, which made things move a lot faster. What took me a month before, I was able to knock out in a weekend.

Improvements This Time Around

- AI-generated voice: I added a voice feature this time, so the guide can actually talk the user through each step instead of relying entirely on text and visuals. It adds a more personal touch to the troubleshooting process.

- Faster development: With the help of AI, I was able to reach the same point as before (summoning the Guide AI, picking an issue, and the UI overlay) in a fraction of the time.

Honestly, it feels like a totally different project now. Having the AI generate dialogue and assist with some of the code has been a game-changer. I’m pushing forward way faster than I expected, and it’s exciting to see where this could go with just a bit more effort.