I recently went down the rabbit hole of AI-generated images to create some deepfakes of myself: basically, fun shots of me “living life” and touching grass. The problem? None of the AI image generators I tried could nail my sideburns. Every time I generated an image of myself, it would either mess up my facial hair or make me look like a different person entirely. So I figured the best way to get accurate results was to train a custom AI model on photos of my own face.

Turns out, there’s a pretty straightforward way to do this thanks to a Google Colab notebook someone way smarter than me put together. I fed it a bunch of images of myself, and the tool spit out a custom-trained model I could use to make surprisingly good (and pretty funny) images of me.

The Sideburn Struggle: Why I Needed a Custom Model

If you’ve tried using AI tools like DALL·E or Midjourney to generate images, you’ve probably noticed they can struggle with details, especially facial hair. I have pretty distinct sideburns, and none of the existing tools could generate them correctly. It was frustrating because no matter how carefully I described them in the prompt, the AI just couldn’t get them right.

That’s when I realized I needed to take things a step further and train a model specifically on my face. Stable Diffusion was the perfect base for this because it’s open-source: the model weights are publicly available, so you can fine-tune it on your own images.

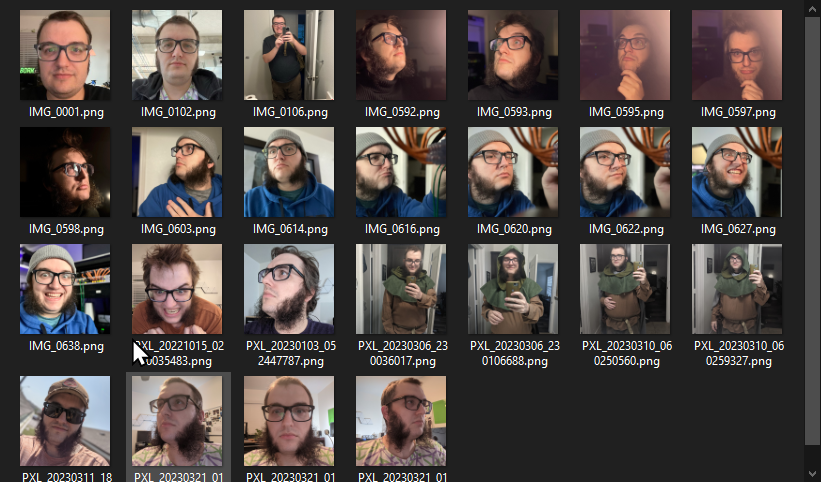

Gathering Training Data: All the Photos of Me

The first step was rounding up a bunch of pictures of myself. The more variety, the better—because the AI needs to learn how my face looks from different angles and in different lighting. Here’s what I did:

- Collected photos from different angles: I gathered as many photos of myself as I could find—everything from selfies to random shots friends had taken. The key was making sure I had enough profile shots so the model could learn how to handle my sideburns from different perspectives.

- Cropped and resized: I cropped the images to focus on my face and resized them to the dimensions the notebook expected. This step is important because it helps the AI home in on what really matters: my face, not the background.
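If you’d rather script the crop-and-resize step than do it all by hand, here’s a minimal sketch using the Pillow library. It assumes the notebook wants square 512×512 images (the native resolution of Stable Diffusion 1.x; check what your notebook actually asks for), and it uses a simple center crop, which is only a rough stand-in for cropping around your face manually. The function names and folder names are placeholders, not part of any notebook.

```python
from pathlib import Path

# Assumption: the training notebook expects square 512x512 images.
TARGET_SIZE = 512


def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, upper, right, lower) for the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def prepare_folder(src_dir: str, dst_dir: str, size: int = TARGET_SIZE) -> int:
    """Center-crop and resize every JPEG/PNG in src_dir, saving PNGs to dst_dir."""
    # Pillow is imported here so the pure box math above has no dependencies.
    from PIL import Image

    out = Path(dst_dir)
    out.mkdir(parents=True, exist_ok=True)
    count = 0
    for path in sorted(Path(src_dir).iterdir()):
        if path.suffix.lower() not in {".jpg", ".jpeg", ".png"}:
            continue  # skip anything that isn't a photo
        with Image.open(path) as img:
            rgb = img.convert("RGB")  # drop alpha channels / odd color modes
            square = rgb.crop(center_square_box(*rgb.size))
            square = square.resize((size, size), Image.LANCZOS)
            square.save(out / f"{path.stem}.png")
        count += 1
    return count
```

Running `prepare_folder("raw_photos", "training_photos")` would write one PNG per photo it finds and return how many it processed. The center crop keeps the script short; for group shots or off-center faces you’d still want to crop those by hand first.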

Using a Google Colab to Train the Model

Now, I’m no machine learning expert, but luckily there’s a Google Colab notebook that makes the training process a lot easier. Its author had already set up the whole pipeline, so I just followed the instructions, fed in my images, and let the notebook handle the rest.

- Feeding the images: I uploaded all the cropped photos of myself to the Colab, and it processed them to create a model that could (hopefully) generate accurate images of my face.

- Training process: The Colab handled all the heavy lifting, training the model on the images I provided. It took a while to work through the data, but once it was done, I had a custom model that had learned my facial structure and, finally, my sideburns!

Here’s a look at the first batch of images the tool spat out after processing the photos I gave it:

Plugging the Model into Automatic1111 for Image Generation

Once the custom model was trained, I plugged it into Automatic1111 (AUTOMATIC1111’s Stable Diffusion web UI), a popular browser-based interface for running Stable Diffusion models; custom checkpoints go in its models/Stable-diffusion folder. This gave me a lot of control over the images I wanted to generate, and I could tweak the prompts to get more accurate results.

- Experimenting with prompts: I had fun trying prompts like “me hiking on a mountain” or “me relaxing on a beach” and watching how the AI recreated those scenarios with my face.

- Adjusting settings: Automatic1111 made it easy to tweak settings like sampling steps, CFG scale, and seed alongside the prompt, which let me dial in composition, lighting, and even style for more realistic results. It was really satisfying to see the AI generate images of me doing things I wouldn’t normally have photos of, like “touching grass” or just being out in nature.
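Everything above happens in the web UI, but the same knobs are also exposed through its HTTP API if you launch it with the --api flag. Here’s a minimal sketch, using only the Python standard library, that sends a prompt to the /sdapi/v1/txt2img endpoint and saves the results. It assumes the UI is running locally on its default port (7860) with your custom model already selected. The trigger phrase “zwx person” is hypothetical, a stand-in for whatever word your training notebook tied to your face, and the negative prompt and default settings are just starting points.

```python
import base64
import json
import urllib.request
from pathlib import Path

# Assumption: web UI started with --api on the default local port.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_payload(scene: str, trigger: str = "zwx person", seed: int = -1,
                  steps: int = 30, cfg_scale: float = 7.0,
                  width: int = 512, height: int = 512,
                  negative: str = "blurry, deformed, extra limbs") -> dict:
    """Build a txt2img request body; 'zwx person' is a hypothetical trigger phrase."""
    return {
        "prompt": f"photo of {trigger} {scene}",
        "negative_prompt": negative,
        "steps": steps,          # sampling steps
        "cfg_scale": cfg_scale,  # how strongly to follow the prompt
        "width": width,
        "height": height,
        "seed": seed,            # -1 asks the web UI for a random seed
    }


def generate(payload: dict, out_dir: str = "outputs", stem: str = "image") -> list[Path]:
    """POST the payload and save every returned image as a PNG."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        result = json.load(resp)

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, b64 in enumerate(result["images"]):
        # Strip a "data:image/png;base64," prefix if one is present.
        data = base64.b64decode(b64.split(",", 1)[-1])
        path = out / f"{stem}_{i}.png"
        path.write_bytes(data)
        paths.append(path)
    return paths
```

Calling `generate(build_payload("touching grass in a park"), stem="grass")` would save the returned image(s) as outputs/grass_0.png and so on. Keeping the seed fixed while changing one setting at a time is an easy way to see what each knob actually does.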

Results: Deepfake Me, Finally Touching Grass

After a bit of trial and error, I finally got some pretty solid results. The AI-generated images were not only recognizably me, but they actually captured my sideburns, which was a huge win. I can now create semi-realistic images of myself doing all kinds of activities—basically living my best life in AI form.

Here’s one of the better images I got out of the process:

What’s Next?

Now that I’ve trained a model that can generate fairly realistic images of me, I’m thinking about experimenting with even more creative scenarios. I might dive into animation next, or maybe work on generating images of myself in wild fantasy settings—who knows?

For now, though, I’m just happy I got my AI-generated sideburns looking right. If you’re interested in training your own model or just want to play around with AI image generation, I highly recommend checking out Stable Diffusion and the Google Colab I used. It’s a fun project, and once you get it working, the possibilities are endless.

Feel free to hit me up if you want tips on how to get started—especially if you, too, want to make sure your AI deepfakes can handle those important details like sideburns!